Project Overview

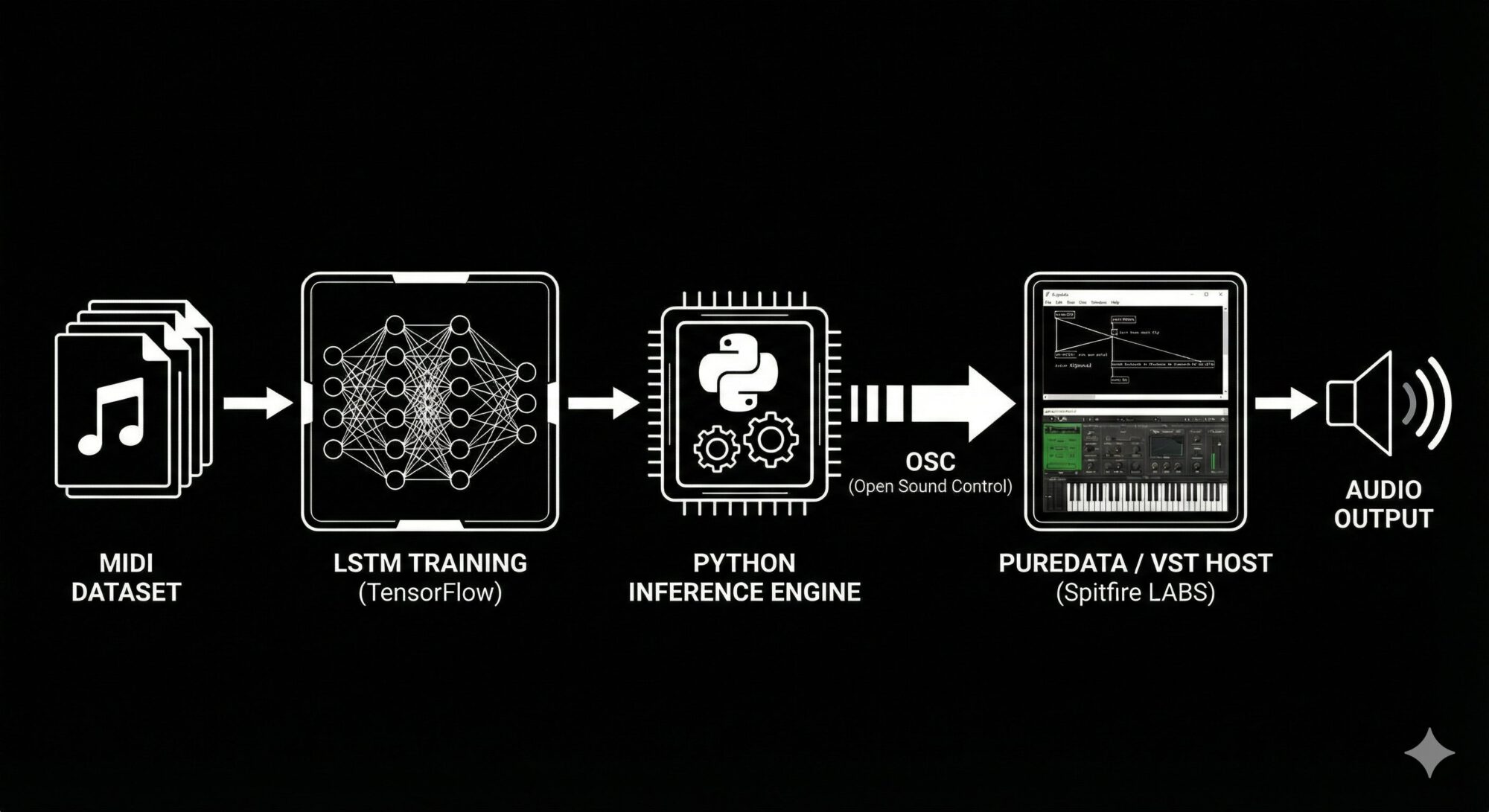

NeuralMIDI-Streamer is a real-time generative music system that bridges the gap between Deep Learning and live audio synthesis. Unlike static AI generation tools, this system functions as a responsive virtual instrument, using LSTM (Long Short-Term Memory) neural networks to generate polyphonic MIDI streams on the fly.

Trained on datasets of works by classical composers (e.g., Bach, Beethoven), the system operates in two distinct modes: a high-performance Desktop Mode that connects Python to professional audio engines, and a highly accessible Web Mode that runs entirely client-side in the browser. Both versions use a custom state machine to handle complex voice allocation, and both let the performer modulate generation parameters such as "Temperature" (creativity) and "Density" (sparsity) live during performance.
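The two performance controls can be illustrated with a small sampling routine. The sketch below assumes the network outputs an independent note-on probability per pitch at each time step, which is a common setup for polyphonic piano-roll models. It divides the logits by the temperature and treats density as the chance that a step sounds at all. `sample_step`, its parameters, and this interpretation of "Density" are hypothetical and are not the project's actual code.

```python
import math
import random
from typing import List, Optional, Sequence


def _logit(p: float, eps: float = 1e-7) -> float:
    """Inverse sigmoid; p is clamped away from 0 and 1 to keep the log finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function (no overflow for large |x|)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sample_step(
    probs: Sequence[float],
    temperature: float = 1.0,
    density: float = 1.0,
    max_voices: int = 4,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Sample one polyphonic time step (hypothetical helper).

    probs       -- per-pitch note-on probabilities from the network (index = pitch offset)
    temperature -- >1 pushes probabilities toward 0.5 (more surprising), <1 sharpens them
    density     -- probability in [0, 1] that this step sounds at all (otherwise a rest)
    max_voices  -- cap on simultaneous notes; the most probable sampled pitches are kept
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng if rng is not None else random.Random()
    if rng.random() >= density:
        return []  # density gate: emit a rest for this step
    # Temperature scaling in logit space, then an independent Bernoulli draw per pitch.
    scaled = [_sigmoid(_logit(p) / temperature) for p in probs]
    chosen = [i for i, p in enumerate(scaled) if rng.random() < p]
    # Keep the most probable voices if too many were sampled.
    chosen.sort(key=lambda i: scaled[i], reverse=True)
    return sorted(chosen[:max_voices])
```

At a very low temperature the step becomes nearly deterministic, so only pitches above 0.5 survive. Raising the temperature pushes every probability toward 0.5, which produces more adventurous output.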

Technical Features

- LSTM Architecture: A recurrent neural network that learns and predicts polyphonic chord structures and temporal dependencies.

- Dual-Platform Pipeline:

  - Desktop: A robust communication bridge via OSC between Python (inference) and PureData (hosting VST plugins via vstplugin~).

  - Web: A fully client-side implementation using TensorFlow.js for inference and Tone.js for synthesis, eliminating the need for server-side processing.

- Live Modulation: Real-time control over stochastic parameters (entropy/density) and "hot-swapping" of composer models (e.g., morphing from Bach to Beethoven) without interrupting the audio.

- Smart Voice Allocation: Custom dispatcher logic pairs every note-on with a note-off and smooths velocities. This prevents "stuck notes" and keeps phrasing organic on both platforms.
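One way to guarantee note-off pairing is to compare, at each step, the set of requested pitches against the set already sounding. A note-off is then only ever emitted for a note that actually received a note-on. The Python sketch below assumes the model produces a set of pitches per step. The `VoiceDispatcher` class, its exponential-moving-average velocity smoothing, and its event tuples are illustrative assumptions and are not the project's dispatcher.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set, Tuple

Event = Tuple[str, int, int]  # (kind, pitch, velocity)


@dataclass
class VoiceDispatcher:
    """Hypothetical dispatcher that turns per-step pitch sets into paired MIDI events."""

    smoothing: float = 0.3  # 0 = follow target velocity instantly, near 1 = slow changes
    active: Set[int] = field(default_factory=set)  # pitches currently sounding
    _velocity: float = field(default=64.0, repr=False)  # smoothed velocity state

    def _smooth(self, target: int) -> int:
        # Exponential moving average avoids abrupt velocity jumps between steps.
        self._velocity = self.smoothing * self._velocity + (1 - self.smoothing) * target
        return max(1, min(127, round(self._velocity)))

    def step(self, pitches: Iterable[int], target_velocity: int = 80) -> Tuple[List[Event], List[Event]]:
        """Return (note_offs, note_ons) for one step.

        A pitch that is still requested keeps sounding (it is not retriggered);
        a pitch that is no longer requested gets exactly one note-off.
        """
        wanted = set(pitches)
        offs = sorted(self.active - wanted)
        ons = sorted(wanted - self.active)
        vel = self._smooth(target_velocity)
        self.active = wanted
        return [("note_off", p, 0) for p in offs], [("note_on", p, vel) for p in ons]

    def all_notes_off(self) -> List[Event]:
        """Release every sounding note, e.g. when the performer stops playback."""
        offs = [("note_off", p, 0) for p in sorted(self.active)]
        self.active.clear()
        return offs
```

Because a held pitch is not retriggered, sustained chord tones do not stutter. Keeping the dispatcher and its `active` set unchanged while the model behind it is swapped is one plausible way to change composers without cutting off notes that are already sounding.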

Tech Stack

- AI/ML: Python, TensorFlow, TensorFlow.js, NumPy

- Audio Engine: PureData (Desktop), Tone.js (Web), VST Plugins (Spitfire LABS)

- Protocols & Standards: Open Sound Control (OSC), Web Audio API